Neural networks have become a go-to approach for tackling complex problems in data science. Pre-built libraries like TensorFlow or PyTorch make these models easy to implement, but there's something particularly rewarding, and educational, about building a neural network from scratch. I recently took on this challenge, using a weather dataset from Kaggle to create a neural network with ReLU activation, relying only on pandas and numpy.

Why Build from Scratch?

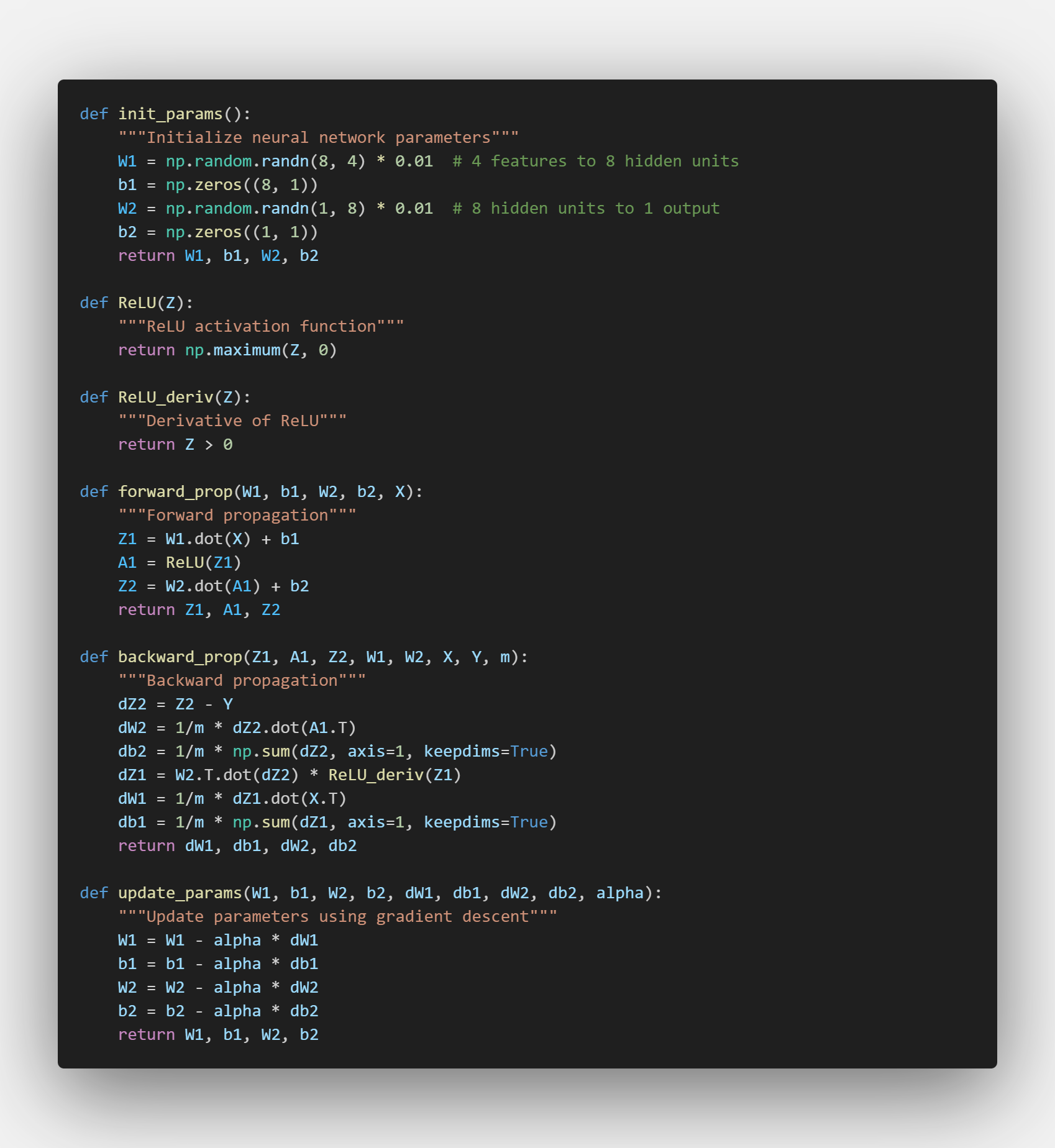

Constructing a neural network manually allows you to understand each moving part: weight initialization, forward propagation, activation functions, loss calculation, and backpropagation. Implementing each of these yourself shows how the pieces fit together and how the network picks up patterns in the data.

Getting Started

Using just pandas and numpy, I built a basic neural network with:

- Layers: The network has an input layer, some hidden layers, and an output layer.

- Activation Function: ReLU (Rectified Linear Unit), chosen for its simplicity and because it helps mitigate the vanishing gradient problem.

- Learning Rate (α): Set to 0.01, which I found balanced training speed against stable convergence.

- Iterations: 1,000 epochs, each one adjusting the weights to reduce the error.
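As a minimal sketch of the settings above, ReLU and its gradient take only a few lines of numpy. The constant and function names here are illustrative assumptions, not taken from the original code.

```python
import numpy as np

# Hyperparameters stated in the post (constant names are hypothetical).
LEARNING_RATE = 0.01
N_ITERATIONS = 1_000


def relu(z):
    """Element-wise ReLU: max(0, z)."""
    return np.maximum(0, z)


def relu_derivative(z):
    """Gradient of ReLU: 1 where z > 0, else 0 (0 is used at z == 0 by convention)."""
    return (z > 0).astype(float)


z = np.array([-2.0, 0.0, 3.0])
print(relu(z))             # [0. 0. 3.]
print(relu_derivative(z))  # [0. 0. 1.]
```

Because the gradient is exactly 1 for positive inputs, it does not shrink as it passes back through many layers the way a sigmoid's gradient does, which is the usual argument for ReLU and vanishing gradients.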

Key Steps

1. Initialize Weights and Biases: Weights and biases start as random values and are adjusted during training to minimize the error.

2. Forward Propagation: Data flows through each layer, where the weights and biases are applied and ReLU serves as the activation.

3. Compute Loss: I used mean squared error (MSE) to measure the difference between predicted and actual values.

4. Backpropagation: This phase computes the gradient of the loss with respect to each weight and bias, then nudges them in the direction that reduces the loss. This is how the network learns the patterns in the dataset.
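The four steps above can be sketched in numpy roughly as follows. This is not the exact code from my project: it assumes a single hidden layer of 8 units, a linear output layer for regression, He-style scaling for the random weights, and a synthetic dataset standing in for the Kaggle weather data. All function names are hypothetical.

```python
import numpy as np


def init_params(n_in, n_hidden, n_out, rng):
    # Step 1: random weights (He-style scaling is an assumption), zero biases.
    W1 = rng.normal(scale=np.sqrt(2.0 / n_in), size=(n_in, n_hidden))
    b1 = np.zeros((1, n_hidden))
    W2 = rng.normal(scale=np.sqrt(2.0 / n_hidden), size=(n_hidden, n_out))
    b2 = np.zeros((1, n_out))
    return W1, b1, W2, b2


def forward(X, W1, b1, W2, b2):
    # Step 2: hidden layer with ReLU, then a linear output (assumption).
    Z1 = X @ W1 + b1
    A1 = np.maximum(0, Z1)
    y_hat = A1 @ W2 + b2
    return Z1, A1, y_hat


def mse(y_hat, y):
    # Step 3: mean squared error over all predictions.
    return np.mean((y_hat - y) ** 2)


def train(X, y, n_hidden=8, lr=0.01, n_iter=1000, seed=0):
    rng = np.random.default_rng(seed)
    W1, b1, W2, b2 = init_params(X.shape[1], n_hidden, y.shape[1], rng)
    losses = []
    for _ in range(n_iter):
        Z1, A1, y_hat = forward(X, W1, b1, W2, b2)
        losses.append(mse(y_hat, y))

        # Step 4: backpropagation via the chain rule, output layer first.
        d_yhat = 2.0 * (y_hat - y) / y.size   # dLoss/dy_hat
        dW2 = A1.T @ d_yhat
        db2 = d_yhat.sum(axis=0, keepdims=True)
        dA1 = d_yhat @ W2.T
        dZ1 = dA1 * (Z1 > 0)                  # ReLU gradient: 1 where Z1 > 0
        dW1 = X.T @ dZ1
        db1 = dZ1.sum(axis=0, keepdims=True)

        # Gradient descent update with learning rate lr.
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return (W1, b1, W2, b2), losses


# Synthetic stand-in data (assumption): 200 rows, 3 features, 1 target.
data_rng = np.random.default_rng(42)
X = data_rng.normal(size=(200, 3))
y = X @ np.array([[1.5], [-2.0], [0.5]]) + 0.3

params, losses = train(X, y)
print(f"loss at start: {losses[0]:.4f}, loss at end: {losses[-1]:.4f}")
```

With real data you would load the CSV with pandas, scale the features, and pass the resulting arrays in place of `X` and `y`. Recording the loss at every iteration, as `losses` does here, is a simple way to check that training is actually converging.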

Results

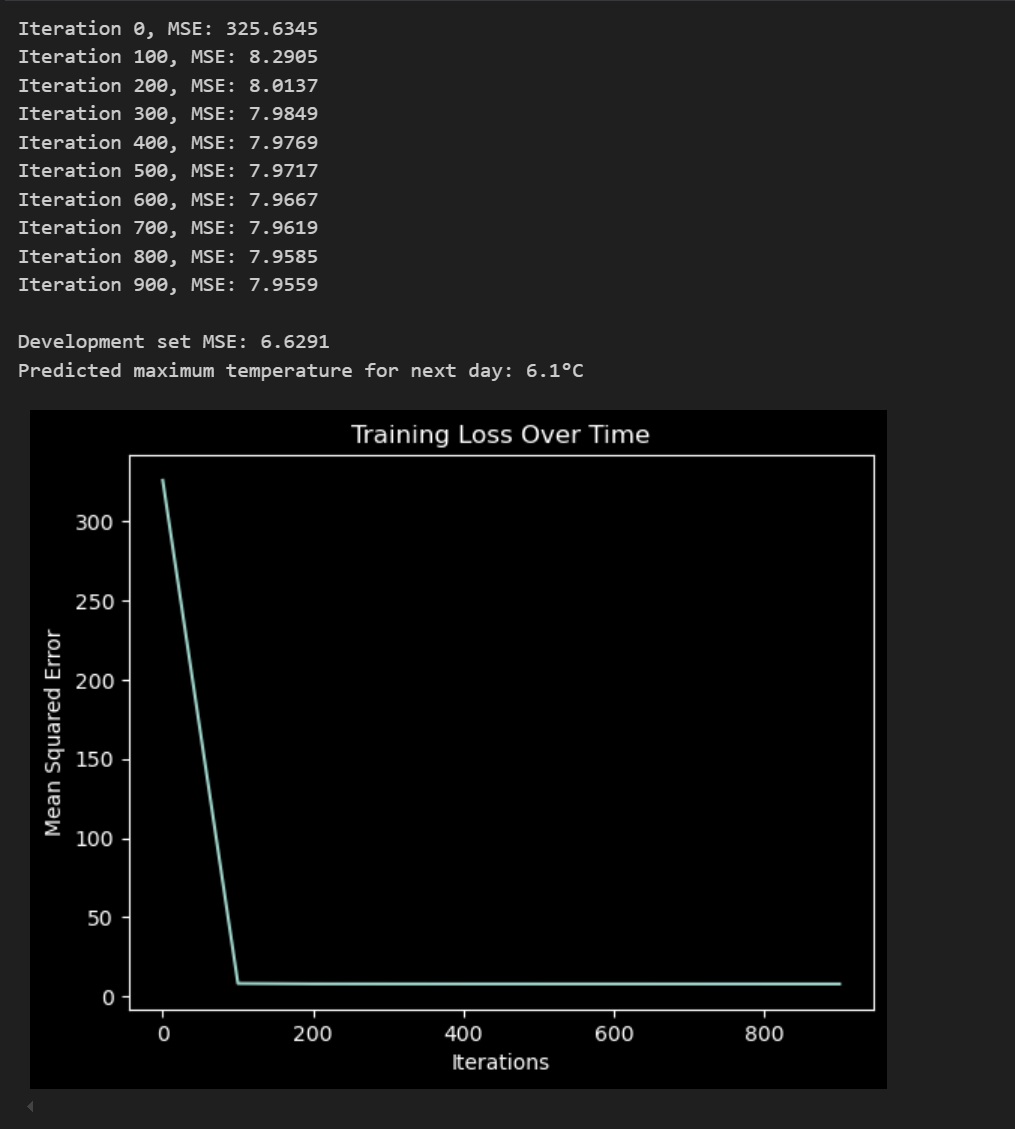

After 1,000 iterations, the network produced a reasonable approximation of the target values in the dataset. The snapshot of the training progress shows how the model's predictions begin to align with the expected results over time.

Building this from scratch was both challenging and rewarding, and it provided valuable insights into how neural networks learn and adapt. If you're looking to deepen your understanding of machine learning, I highly recommend this exercise.